Below is my analysis of mobile application reviews using Python. The data was cleaned in my previous post, Data Manipulation with Natural Language Processing.

In addition, I manually scored 200 randomly selected reviews with sentiment scores from -5 to 5, where -5 is a negative comment, 0 is a neutral comment, and +5 is a positive comment. The goal is to create training data for a machine learning algorithm that predicts sentiment scores.
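As a rough illustration of how such a labelling sample could be drawn, here is a minimal sketch. The DataFrame, the `review` and `sentiment` column names, and the seed are all assumptions made for the example, not the code used for this post.

```python
import pandas as pd

# Toy stand-in for the cleaned reviews; the column name is hypothetical.
reviews = pd.DataFrame({"review": [f"review text {i}" for i in range(1000)]})

# Draw 200 reviews without replacement; a fixed seed makes the sample reproducible.
to_label = reviews.sample(n=200, random_state=0).copy()

# Empty column to be filled in by hand with integers from -5 to 5.
to_label["sentiment"] = pd.NA
```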

Metadata

How many unique reviews remain after dropping duplicates?

There are 1,478,938 unique reviews collected.
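A count like this can be obtained with `drop_duplicates`. The sketch below uses a tiny made-up DataFrame with hypothetical column names (`app_id`, `review`, `score`) and treats a duplicate as a row that matches on every column, which may differ from the rule used for the real dataset.

```python
import pandas as pd

# Toy stand-in for the cleaned dataset; column names are hypothetical.
reviews = pd.DataFrame({
    "app_id": ["a1", "a1", "a2", "a2"],
    "review": ["great app", "great app", "keeps crashing", "love it"],
    "score": [5, 5, 1, 5],
})

# Drop rows that are exact duplicates across all columns, then count what remains.
unique_reviews = reviews.drop_duplicates().reset_index(drop=True)
n_unique = len(unique_reviews)
print(f"There are {n_unique:,} unique reviews.")
```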

How many unique apps are in the dataset? How many apps in each of the 8 specified app categories?

There are 86 unique apps. The count of apps in each of the 8 specified app categories is below.

| category | apps |
| --- | --- |
| EDUCATION | 10 |
| ENTERTAINMENT | 13 |
| FAMILY | 10 |
| FINANCE | 10 |
| GAME_ACTION | 11 |
| HEALTH_AND_FITNESS | 10 |
| LIFESTYLE | 12 |
| MUSIC_AND_AUDIO | 11 |
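One way to produce these two numbers is with `nunique`, once on the whole column and once per category. This is a sketch on made-up data, and the `app_id` and `category` column names are assumptions.

```python
import pandas as pd

# Toy data: one row per review; column names are hypothetical.
reviews = pd.DataFrame({
    "app_id": ["a1", "a1", "a2", "a3", "a3"],
    "category": ["FINANCE", "FINANCE", "FINANCE", "EDUCATION", "EDUCATION"],
})

# Number of distinct apps in the whole dataset.
n_apps = reviews["app_id"].nunique()

# Number of distinct apps within each category.
apps_per_category = reviews.groupby("category")["app_id"].nunique()
print(n_apps)
print(apps_per_category)
```

With this approach, an app listed under more than one category is counted once per category, so the per-category counts can sum to more than the number of unique apps.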

What happens when we drop reviews that contain fewer than two words?

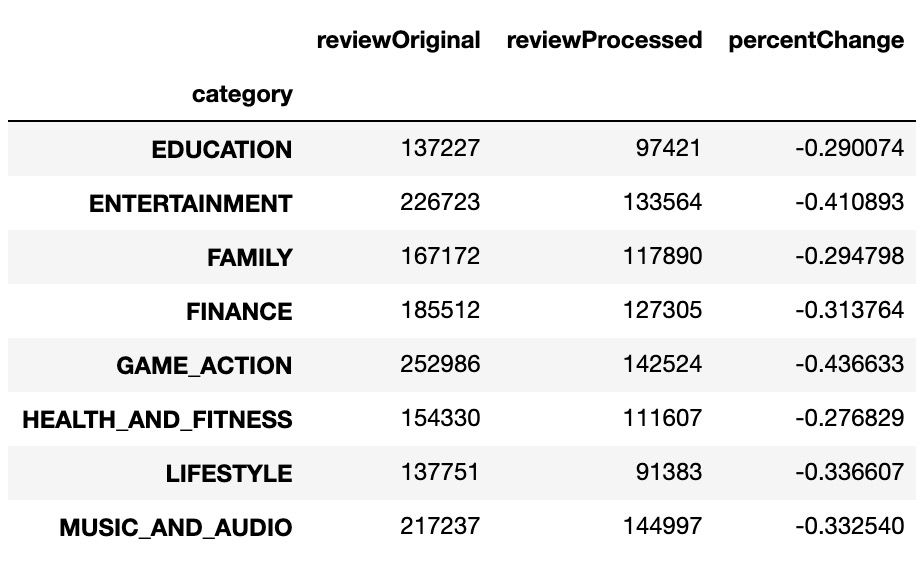

The count of reviews in each of the eight app categories is below. The percenChange column shows the change relative to the original dataset. Interestingly, the Game Action category shows a very large decrease in the number of reviews.

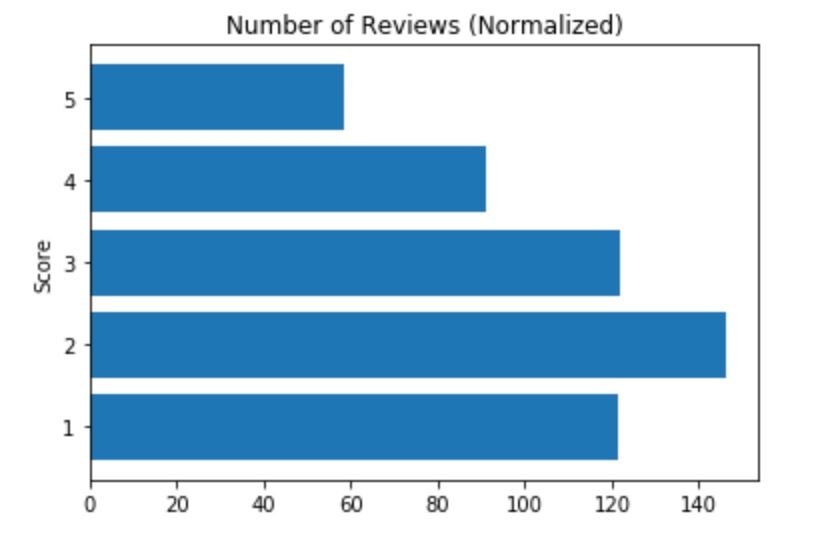
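The filter and the per-category comparison can be sketched as follows. The data is made up, the column names are hypothetical, and words are assumed to be whitespace-separated tokens.

```python
import pandas as pd

# Toy data; column names are hypothetical.
reviews = pd.DataFrame({
    "category": ["GAME_ACTION"] * 4 + ["FINANCE"] * 2,
    "review": ["good", "nice", "fun", "too many ads", "cannot log in", "works well"],
})

# Count whitespace-separated words and keep reviews with at least two.
word_count = reviews["review"].str.split().str.len()
filtered = reviews[word_count >= 2]

# Compare per-category review counts before and after the filter.
before = reviews.groupby("category").size()
after = filtered.groupby("category").size().reindex(before.index, fill_value=0)
summary = pd.DataFrame({"before": before, "after": after})
summary["percent_change"] = (summary["after"] - summary["before"]) / summary["before"] * 100
print(summary)
```

In this toy example the game category loses most of its rows because its reviews are mostly one word long.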

Number of reviews for each score

After normalizing the counts, a large proportion of reviews have a score of 5 or 1. This pattern is common in customer review surveys, because people tend to leave reviews when they absolutely love or hate a product.

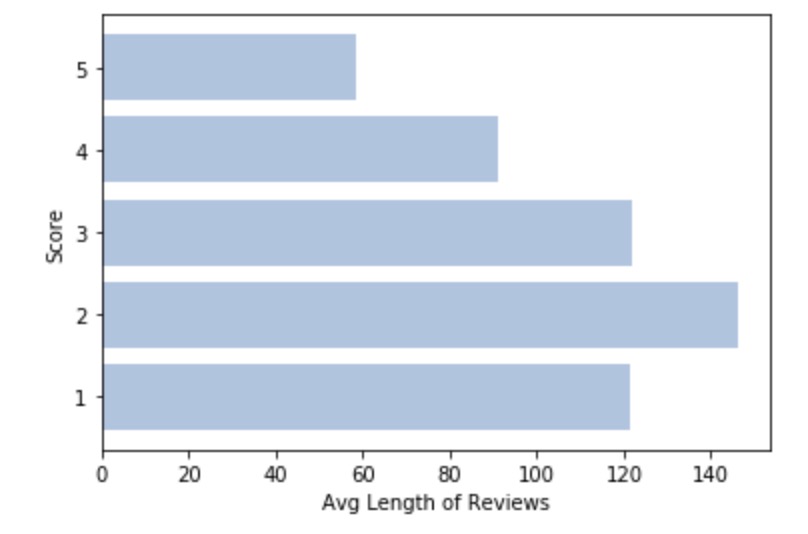
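Normalized counts of this kind can be computed with `value_counts(normalize=True)`. A minimal sketch on made-up scores:

```python
import pandas as pd

# Toy star ratings; the real data has one score per review.
scores = pd.Series([5, 5, 5, 1, 1, 3, 4, 5], name="score")

# Share of reviews at each score, ordered from 1 to 5.
proportions = scores.value_counts(normalize=True).sort_index()
print(proportions)
```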

Average length of reviews in each score-sub-group

The average review length in each score sub-group is below. The three lowest scores have the longest reviews on average. This makes sense because people who dislike a product may be more vocal in explaining their distaste.

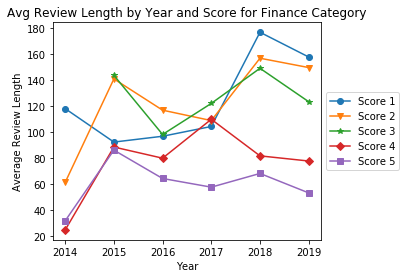
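The averages can be computed with a group-by on the score. The sketch below measures length in words, which is an assumption, since length could equally be measured in characters. The data and column names are made up.

```python
import pandas as pd

# Toy data; column names are hypothetical.
reviews = pd.DataFrame({
    "score": [1, 1, 2, 5, 5],
    "review": [
        "app crashes every single time",
        "does not open",
        "too many ads after the update",
        "great",
        "love it",
    ],
})

# Review length measured as the number of whitespace-separated words.
reviews["n_words"] = reviews["review"].str.split().str.len()

# Mean length within each score sub-group.
avg_len_by_score = reviews.groupby("score")["n_words"].mean()
print(avg_len_by_score)
```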

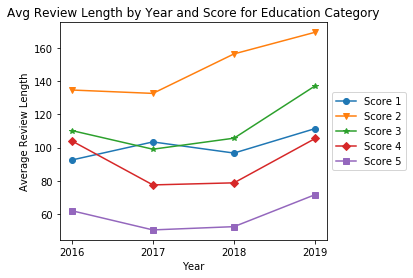

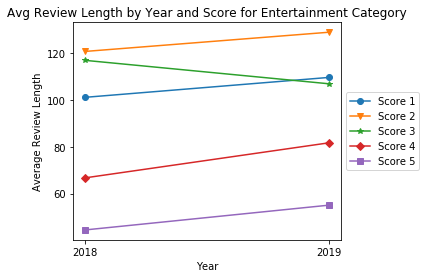

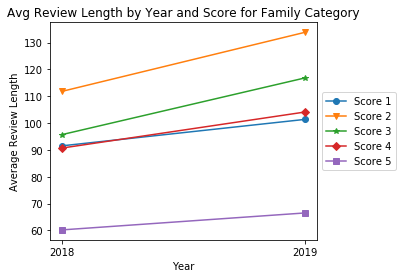

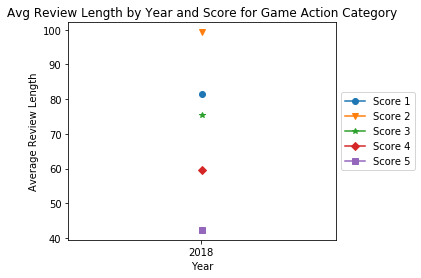

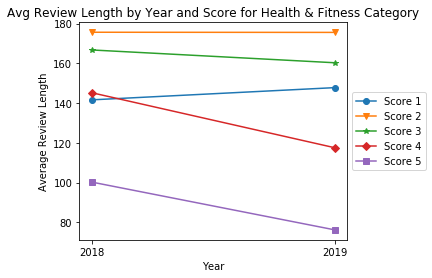

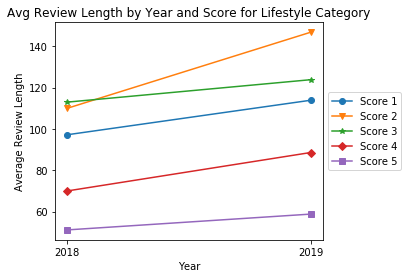

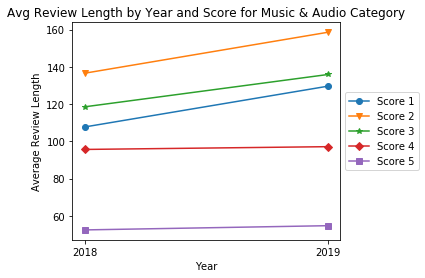

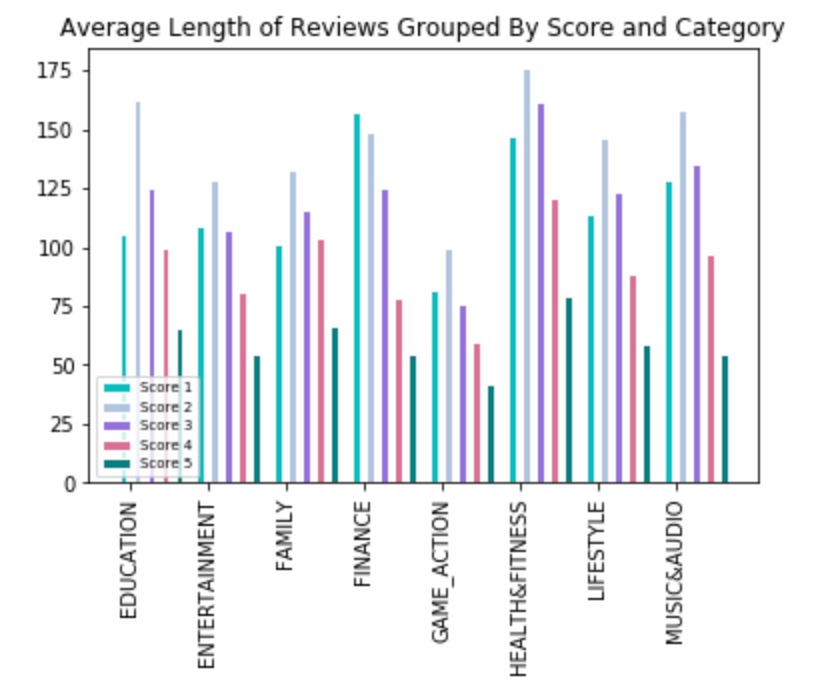

Average length of reviews in each score-sub-group by category

The average review length in each score sub-group, broken out by the 8 app categories, is below. In every category, the three lowest scores have the longest reviews on average, consistent with the overall result above. Finance is the only category in which Score 1, rather than Score 2, has the highest average length.

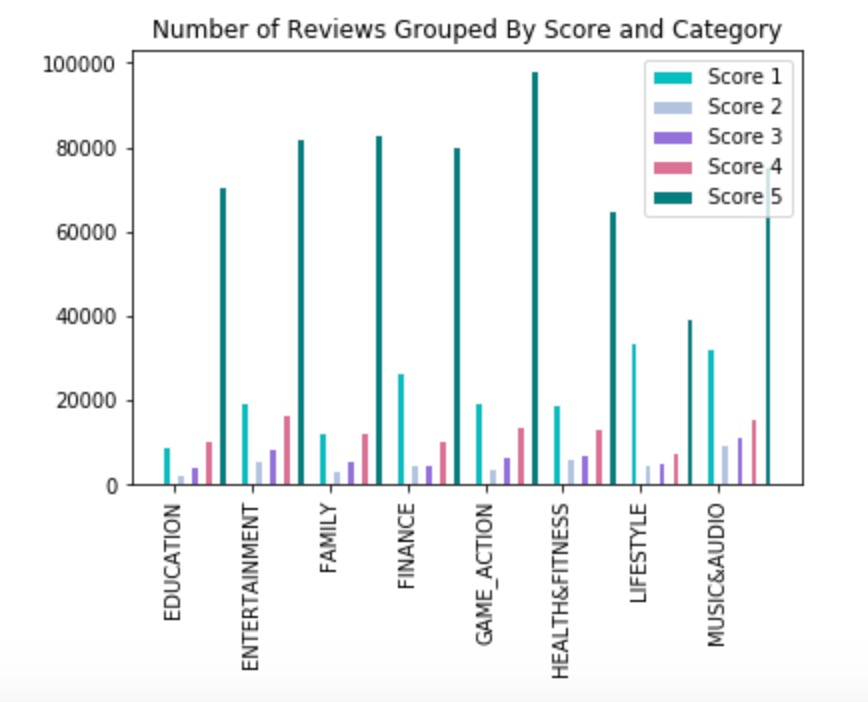
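A category-by-score table of average lengths, plus the score with the longest reviews in each category, can be sketched with `pivot_table` and `idxmax`. The numbers below are invented for illustration, and `n_words` is a hypothetical precomputed length column.

```python
import pandas as pd

# Toy data with a precomputed length column; all names and values are made up.
reviews = pd.DataFrame({
    "category": ["FINANCE", "FINANCE", "FINANCE", "EDUCATION", "EDUCATION", "EDUCATION"],
    "score": [1, 2, 5, 1, 2, 5],
    "n_words": [12, 9, 3, 8, 11, 2],
})

# Rows are categories, columns are scores, cells are mean review lengths.
avg_len = reviews.pivot_table(
    index="category", columns="score", values="n_words", aggfunc="mean"
)

# For each category, the score whose reviews are longest on average.
longest_score = avg_len.idxmax(axis=1)
print(avg_len)
print(longest_score)
```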

Number of reviews in each score-sub-group by category

Each category has its largest proportions in Score 5 and Score 1. This pattern is common in customer review surveys, because people usually leave reviews when they feel strongly about a product: Score 5 when they love it, and Score 1 when they are really unhappy with it.
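Per-category score proportions can be computed with `pd.crosstab`, normalizing within each row. This is a sketch on made-up data with hypothetical column names.

```python
import pandas as pd

# Toy data; column names are hypothetical.
reviews = pd.DataFrame({
    "category": ["FINANCE"] * 4 + ["EDUCATION"] * 4,
    "score": [5, 5, 1, 3, 5, 1, 1, 4],
})

# normalize="index" makes each category's row sum to 1.
share = pd.crosstab(reviews["category"], reviews["score"], normalize="index")
print(share)
```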

Correlation between the length of reviews and the score in each category

Review length and score are negatively correlated in every app category. This is in line with the previous analysis, where lower scores had higher average review lengths in all app categories.

| category | correlation (length vs. score) |
| --- | --- |
| EDUCATION | -0.632214 |
| ENTERTAINMENT | -0.861607 |
| FAMILY | -0.638768 |
| FINANCE | -0.974997 |
| GAME_ACTION | -0.859411 |
| HEALTH_AND_FITNESS | -0.792682 |
| LIFESTYLE | -0.788759 |
| MUSIC_AND_AUDIO | -0.824784 |
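A per-category Pearson correlation can be sketched as below. The post does not say whether the correlation was computed on individual reviews or on per-score averages, so this example assumes individual reviews. The data and column names are made up.

```python
import pandas as pd

# Toy data; all names and values are made up.
reviews = pd.DataFrame({
    "category": ["FINANCE"] * 5 + ["EDUCATION"] * 5,
    "score": [1, 2, 3, 4, 5, 1, 2, 3, 4, 5],
    "n_words": [10, 8, 6, 4, 2, 9, 11, 6, 3, 2],
})

# Pearson correlation between score and review length within each category.
corr_by_category = (
    reviews.groupby("category")[["score", "n_words"]]
    .apply(lambda g: g["score"].corr(g["n_words"]))
)
print(corr_by_category)
```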

Does the correlation between star rating and length of reviews change over time?

I would expect every category to show the same trend in every year as in my previous analysis, in which lower scores had longer average review lengths overall. After reviewing the line graphs for all 8 app categories, the trend does hold throughout the years. Score 4 and Score 5 remain the lowest in average review length, while Score 1, Score 2, and Score 3 remain the highest. Score 2 has the highest average review length in every year for every category except Finance, where it does not in 2014, 2018, and 2019.
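The yearly breakdown behind such line graphs can be sketched by extracting the year from a review date and pivoting on category, year, and score. The `date` column, the other column names, and the values are assumptions made for the example.

```python
import pandas as pd

# Toy data; all names and values are made up.
reviews = pd.DataFrame({
    "date": pd.to_datetime(["2014-03-01", "2014-06-15", "2018-01-10", "2018-09-30"]),
    "category": ["FINANCE"] * 4,
    "score": [1, 2, 1, 2],
    "n_words": [14, 10, 9, 12],
})

reviews["year"] = reviews["date"].dt.year

# Mean review length per (category, year), one column per score.
avg_len_by_year = reviews.pivot_table(
    index=["category", "year"], columns="score", values="n_words", aggfunc="mean"
)

# Score with the longest average review in each category and year.
top_score = avg_len_by_year.idxmax(axis=1)
print(top_score)
```

Calling `avg_len_by_year.loc["FINANCE"].plot()` would then draw one line per score across the years for that category, provided matplotlib is installed.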